How to setup Data Quality Rules?

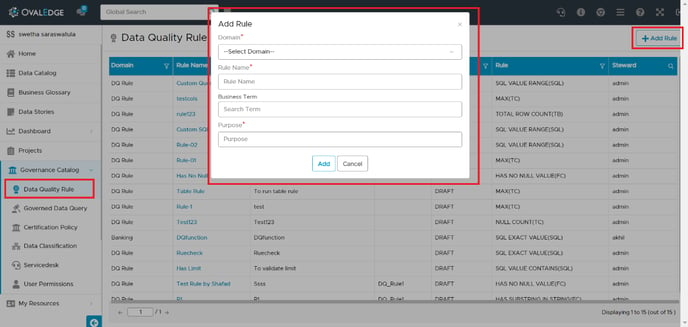

To create a data quality rule in the data quality rule summary page, go to

Governance Catalog > Data Quality Rule and click the +Add Rule button to create a new data quality rule.

The data quality rule page opens to define all the parameters.

- Domain - Select the Domain name from the business term list.

- Rule Name - Name that identifies the rule to be written.

- Business Term - The term that governs the data quality rule.

- Purpose - Description defining the need for this data quality rule.

We are only collecting high level details of Data Quality Rule while adding rules. Type of Rule, Rule object is collected within the detail page.

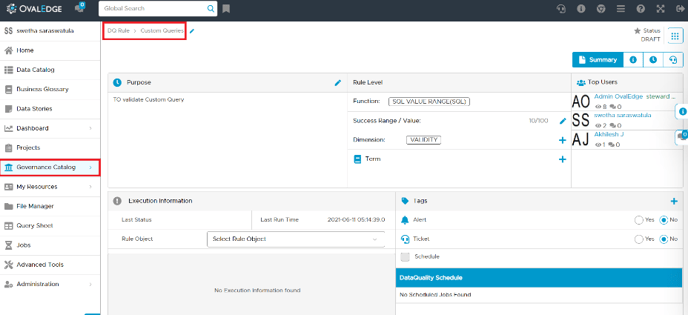

- Select the newly added rule (under the Rule Name column) from the summary page if the Data Quality Rule is created with the details mentioned above.

Edit a few or all the following to define the rule conditions:

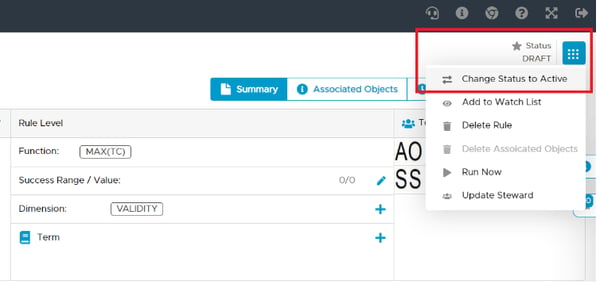

The rule editor can do any of the following when the rule is in DRAFT state as shown above under Status (top right corner).

- Edit the Purpose if more description is required to be added.

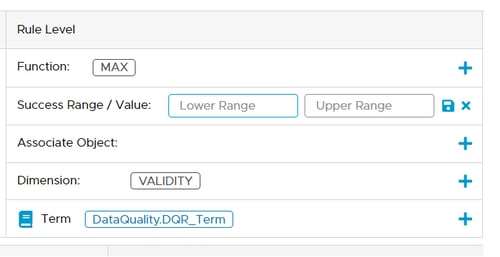

- Under ‘Rule Level’: we have Functions, Dimensions, and Success Range / Values.

Rule Level fields

Let us know more about Rule level fields in detail.

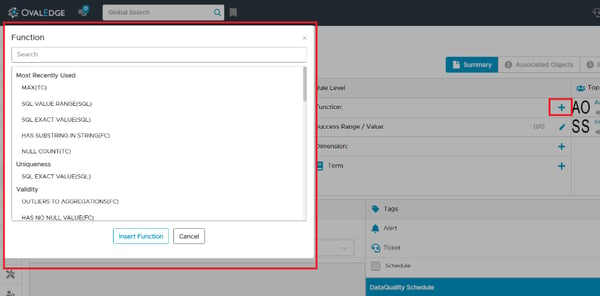

1. Functions

Each function has an execution mechanism & it can be associated with a certain kind of object. OvalEdge application comes with the following default Functions:

- Table Functions:

- DENSITY

- NULL DENSITY

- TOTAL ROW COUNT

- Table Column Functions:

- AVERAGE

- DISTINCT

- MIN

- MAX

- NULL COUNT

- STD DEVIATION

- SUM

- TOP VALUES

- SQL Functions:

- SQL EXACT VALUE

- SQL VALUE CONTAINS

- SQL VALUE RANGE

Note: Most used functions are considered based on the maximum number of times that a Function is used in creating Rules. The system will allow you to choose the Object (Table/ Column/ SQL/ File Column) based on the selected function.

Point to be noted

The application comes up with default functions with suffix as shown below:

| Suffix | Object Type |

| FC | File Column |

| SQL | SQL Query |

| TB | Table |

| TC | Table Column |

If we want to create a Data Quality Rule for the file column, we need to select a function with the suffix (FC). Please refer to the above table for understanding the suffix for different object types.

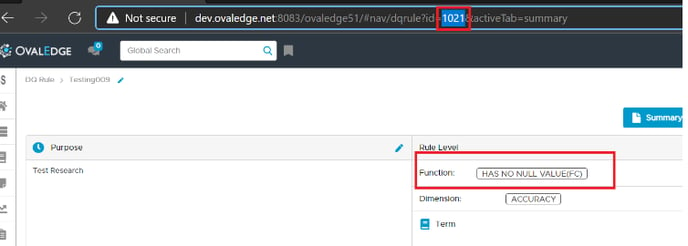

Example: Creating a DQR for file column to check null values. The above steps remain the same, and while selecting the function, we need to select ‘HAS NO NULL VALUE(FC).’ Other attributes remain the same.

Please see this article Data Quality rule types for File columns to know more about the types of Data Quality rules existing for the file columns.

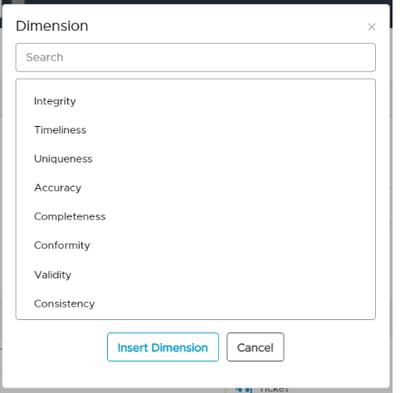

2. Dimensions

Functions are categorized into Dimensions for Reporting purposes. Each Function is associated with a dimension, and users will have the ability to change it. After creating a Rule with a Function (having a Dimension), we calculate Data Quality Index for each Data Object based on the number of Rules, successful and failed executions, no. of tickets reported, and the number of tickets resolved.

In the Data Quality index page, these Dimensions are used to show the completeness of an object against each Dimension.

3. Success Range / Value

Some Functions take values in Range and some take in percentages. For example, the MAX function can be successful if the Max value of the column(order) is between 20000 to 25000.

The NULL COUNT function can be successful if the null count of a column(address) is between 2% to 5% of the total rows.

- Add Terms / Tags where the history/audit is displayed as shown below:

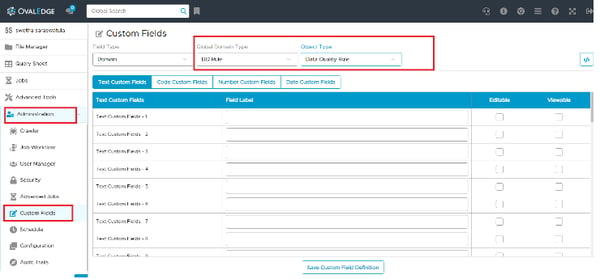

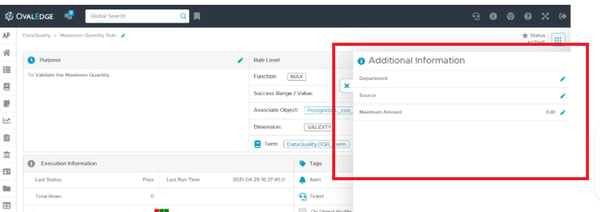

- We can add Additional Information to a Rule using Custom Fields.

(Administration 🡪 Custom Fields).

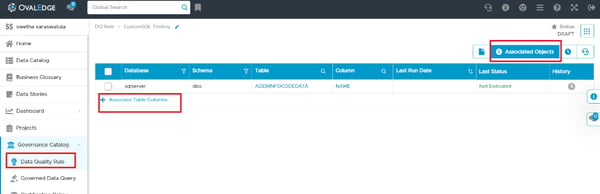

- Click on ‘Associate Table Columns’ under ‘Associated Objects’ to apply rules to some or all the objects.

- Users can apply the rule to any associated data object by selecting the checkbox corresponding to the data object.

- Users can delete the rule applied to added data objects by selecting the nine dots.

Point to be noted

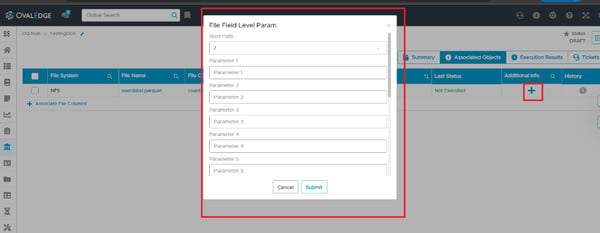

If we are creating a Data Quality Rule for the File column, then additionally, we can add ‘Additional Info’ with respect to field level parameters in a file as shown below.

- Additional details can be added to the data quality rule,

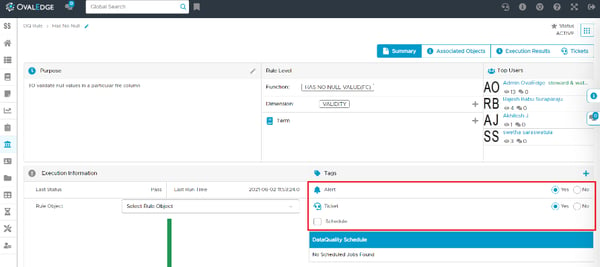

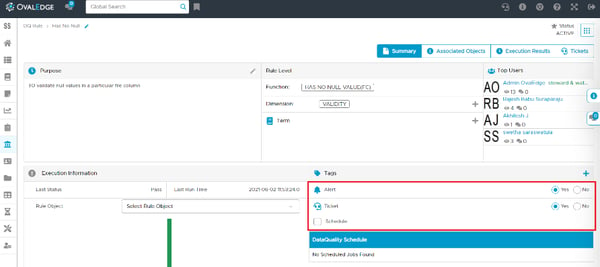

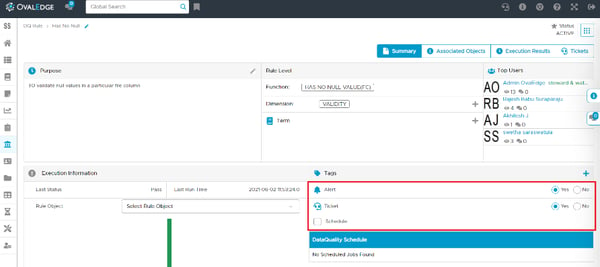

- If data FAILS to pass the quality rule, the system can notify an ALERT to owners and stewards of the assigned object.

- If data FAILS to pass the quality rule, the system can notify it to raise a data quality service desk TICKET to its owner and steward of the assigned object.

- The user has the option to execute the rule when the data object is profiled or can schedule a check for Every Hour/Day/Week/Month/Year at a specific time.

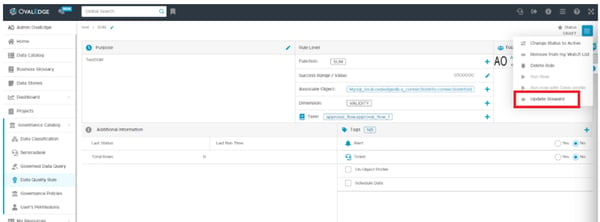

- Change the Steward of the Rule from (9 dots) on top right corner.

- Once the modifications are done, select the nine dots and change the status to ACTIVE.

- The defined data quality rule is created and displayed on the Data quality rule summary page.

Viewing details of the Data Quality Rule

Users can view the individual Data Quality Rule details by clicking a Data Quality Rule listed from the Summary page. The details of the selected Data Quality rule can be viewed on this page.

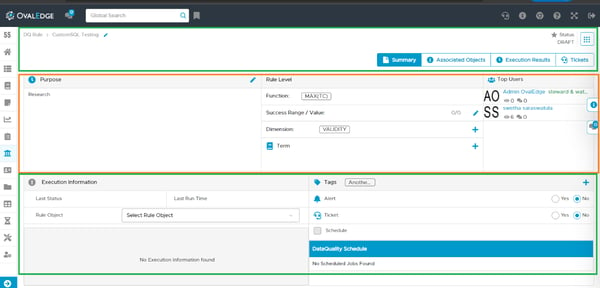

- The top section displays the Status, Summary, Associated Objects, Execution Results, and Tickets tabs.

- The middle section of this page displays the purpose, Functions, Top users, etc.

- The bottom section displays the Tags, Alerts, and Job Schedules on these DQR rules.

displays any Additional information which we are getting it from Custom Fields from the Administration section.

displays any Additional information which we are getting it from Custom Fields from the Administration section.

Executing a data quality rule

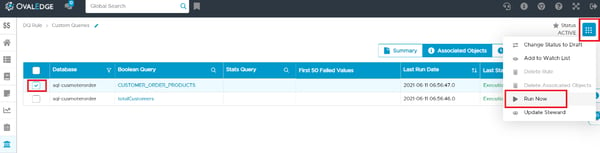

Status must be ACTIVE to run the data quality rule.

Data quality rules are by default executed whenever the data object associated with the data rule is PROFILED. Alternatively, the data quality rules can be executed in four different ways.

1. Run Now

Using nine dots, run at any point of time, the rule will use the profiling information if the table has been profiled; otherwise, it will query the source system to execute the rule.

2. Schedule

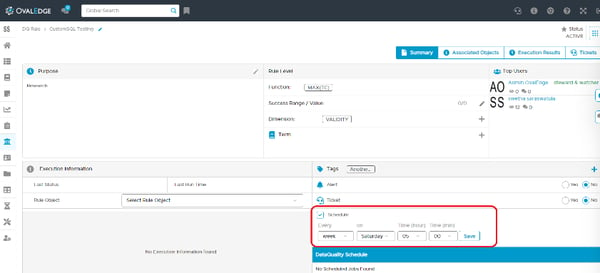

We can schedule the Rule to run at a specified time. It does the table profiling at the run time and executes the rule with the profile information.

To set a specific time for executing the rule, select the checkbox next to the scheduled date. Choose the interval and time at which the data quality rule should be executed.

Example: The Data Quality rule is scheduled to run at Every WEEK on SUNDAY at 05 HOUR and 30 MINUTES.

- The Schedule can be edited only when the Data Quality Rule is in DRAFT status. To edit a Data quality rule, click the nine dots and change the status to DRAFT.

3. Advanced Job – (Only for FILE COLUMNS)

Apart from the above two mechanisms, we can also run an advanced Data Quality Rule job for the file columns.

Process to run advanced DQR job for file columns:

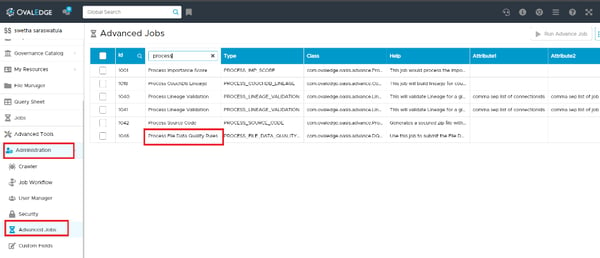

- Select the job name: ‘Process File Data Quality Rules’ from Administrator 🡪 Advanced Tools as shown below:

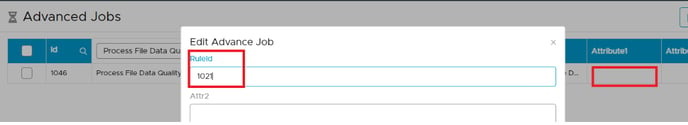

- Update the Job details under ‘Attribute1’ with Rule Id.

- If the value of

- Rule Id = -1, the Advance job will run on the all the active file field DQRs

- Rule Id = DQR Id, advanced job will run only for that rule. We get the data quality rule id from the URL as shown below:

- Update the Job details under ‘Attribute1’ with Rule Id.

- Select an Advance job and click on Run Advanced Job.

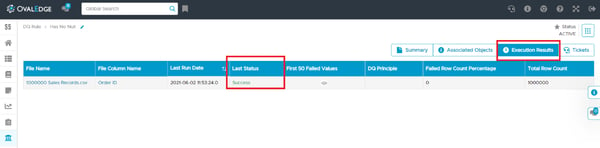

- To view the execution results of a data quality rule, click on the Execution Results Tab as shown below:

Notifying users when data quality rule fails

When a data quality rule fails, the rule editor can set an ALERT message to the STEWARD of the Data Quality Rule to act. Also, suppose data FAILS to pass the quality. In that case, the system can automatically raise a service desk TICKET and send a notification message to the assigned STEWARD of the DAG at the schema level.

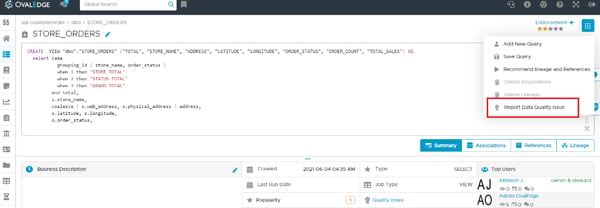

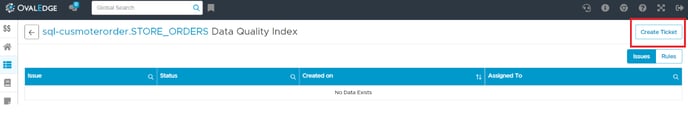

Data Quality Index

In Data Catalog, If we want to raise any data quality issue at table, column, file, file column level, we can directly click on 9 dots on the top right corner and select ‘Request Data Quality Issue’ which will route to ‘Data Quality Index’ page.

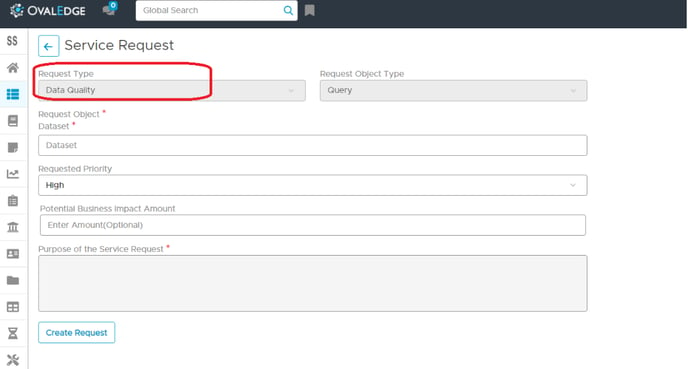

We can manually raise a ticket by clicking on ‘Create Ticket’, it will navigate to the service desk's new request create page.

Deleting a data quality rule

You can remove the Data Quality Rules that are no longer needed. To delete a data quality rule,

- Select and click a data quality rule name.

- Click the nine dots and choose the Delete Rule to delete the selected rule.

- The selected rule gets deleted.

Additional Information

- The file must be crawled & profiled before creating a Data Quality Rule for the file column.

- Data Quality Rule for Table, Table Columns can be run multiple times in a day, but the File Data Quality rule (FDQR), can run once a day (or) when new data is added.

- When a rule is run on a file column, all the rules applicable for any of the columns in the file are also run. This is to optimize the process of downloading and parsing the file content, which is an expensive process in terms of time.