Connectivity Summary

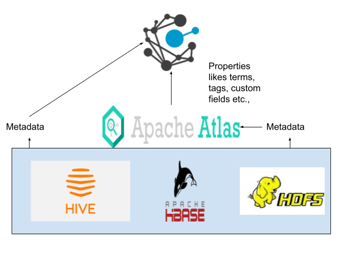

An out-of-the-box connector is available for Apache Atlas. Because Atlas is itself a data cataloging tool, the connector pulls all the properties assigned to the metadata, along with the built-in lineage from Atlas.

The Atlas REST API is used to crawl Atlas.
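As a rough illustration of what crawling over REST involves, the sketch below builds requests against the Apache Atlas v2 endpoints for basic search and lineage. It is not the connector's actual code: the helper names, the page size, and the host `atlas.example.com` are assumptions made for this example, and no network call is made here.

```python
import base64
from urllib.parse import urlencode

# Hypothetical Atlas base URL, used only for illustration.
ATLAS_URL = "http://atlas.example.com:21000"


def basic_auth_header(username, password):
    """Build an HTTP Basic Authorization header for the crawl account."""
    raw = f"{username}:{password}".encode("utf-8")
    return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}


def search_url(base_url, type_name, limit=100, offset=0):
    """URL for the Atlas v2 basic search, listing entities of one type."""
    query = urlencode({"typeName": type_name, "limit": limit, "offset": offset})
    return f"{base_url.rstrip('/')}/api/atlas/v2/search/basic?{query}"


def lineage_url(base_url, guid, direction="BOTH", depth=3):
    """URL for the Atlas v2 lineage of a single entity GUID."""
    query = urlencode({"direction": direction, "depth": depth})
    return f"{base_url.rstrip('/')}/api/atlas/v2/lineage/{guid}?{query}"


def entity_guids(search_response):
    """Collect GUIDs from a parsed search response (no 'entities' key means no hits)."""
    return [entity["guid"] for entity in search_response.get("entities", [])]


if __name__ == "__main__":
    print(search_url(ATLAS_URL, "hive_table", limit=10))
    print(lineage_url(ATLAS_URL, "some-guid"))
```

The URLs and header can be passed to any HTTP client; paging through results would repeat `search_url` with an increasing `offset`.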

Technical Specifications

The connector capabilities are shown below:

Crawling

| Feature | Supported Objects | Remarks |
| --- | --- | --- |
| Crawling | Schemas | Business Description, Terms, Tags, Custom Fields |
| | Tables | Business Description, Terms, Tags, Custom Fields and Lineage |
| | Columns | Business Description, Terms, Tags, Custom Fields |
| | Files | Business Description, Terms, Tags, Custom Fields and Lineage |

Lineage Building

| Lineage Entities | Details |
| --- | --- |
| Table lineage | Lineage is pulled if it exists on premise |
| Column lineage | Not supported |

Lineage process

Follow these steps to display the lineage pulled from Atlas:

- Make sure both the Hive connection (for example) and the Atlas connection have been crawled successfully.

- On the Crawler page, select the Atlas connection for which you want to pull the lineage, then select the 'Build lineage' option from the 9-dots menu.

- You are redirected to the Build Lineage page, which lists the available datasets. Select the dataset for which you want to build lineage and click 'Process lineage' in the 9-dots menu.

- A job is initiated to map the objects. Check the job status on the Jobs page.

- After the job completes successfully, go to the Data Catalog, open the table for which the lineage was built, and select the Lineage tab to view the lineage.
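To make the "map the objects" step more concrete, here is a minimal sketch of how table-level lineage could be derived from an Atlas lineage response. It assumes the response has the Atlas v2 shape (`guidEntityMap` plus a `relations` list of `fromEntityId` / `toEntityId` pairs) and that tables are connected through an intermediate process entity. The function name and the `hive_table` default are assumptions for this example, not a description of how OvalEdge implements the job.

```python
def table_edges(lineage, table_types=("hive_table",)):
    """Collapse table -> process -> table hops into direct table -> table edges."""
    nodes = lineage.get("guidEntityMap", {})

    def is_table(guid):
        return nodes.get(guid, {}).get("typeName") in table_types

    # Adjacency list: source GUID -> list of target GUIDs.
    downstream = {}
    for relation in lineage.get("relations", []):
        downstream.setdefault(relation["fromEntityId"], []).append(relation["toEntityId"])

    edges = set()
    for source in nodes:
        if not is_table(source):
            continue
        for middle in downstream.get(source, []):
            if is_table(middle):
                # Direct table-to-table relation.
                edges.add((source, middle))
                continue
            # Skip over one intermediate (process) node.
            for target in downstream.get(middle, []):
                if is_table(target):
                    edges.add((source, target))
    return sorted(edges)


if __name__ == "__main__":
    sample = {
        "guidEntityMap": {
            "t1": {"typeName": "hive_table"},
            "p1": {"typeName": "hive_process"},
            "t2": {"typeName": "hive_table"},
        },
        "relations": [
            {"fromEntityId": "t1", "toEntityId": "p1"},
            {"fromEntityId": "p1", "toEntityId": "t2"},
        ],
    }
    print(table_edges(sample))
```

Only one intermediate hop is collapsed, which matches the table-level lineage listed as supported; column lineage is not covered.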

Profiling and Querying are NOT supported.

Pre-requisites

To use the connector, the following need to be available:

- Before connecting to Atlas, connect to the Hive / HDFS connection on which Atlas is built.

- Crawl the Hive / HDFS connection to get metadata such as schema names, table names, and columns.

- Make sure you have the connection ID of that connection and that the connection has been crawled.

- While creating the Atlas connection, provide the correct connection details, including the connection info ID from the previous step.

- An admin / service account for crawling. The minimum privileges required are:

| Operation | Access Permissions |
| --- | --- |
| Connection validate | R - Read access |
| Crawl schemas | R - Read access |
| Crawl tables | R - Read access |

Connection Details

The following connection settings should be added to connect to an Atlas data governance tool:

- Database Type: Atlas

- Connection Name: Enter a connection name for the Atlas database. The name you specify is a reference name that identifies your Atlas database connection in OvalEdge. Example: Atlas Connection DB.

- License Type:

  - Standard: Lineage will not be pulled

  - Auto-Lineage: Lineage will be pulled if it exists

- Atlas URL: The server name or IP address, with port number, on which Atlas is running. Example: http://18.220.154.229:31000

- Username: The user that accesses Atlas, with at least the minimum crawling permissions.

- Password: The password used to authenticate the user.

- HDFS Connection ID: The connection info ID of the HDFS connection for which we are trying to get the properties.

- HIVE Connection ID: The connection info ID of the HIVE connection for which we are trying to get the properties.
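The settings above can be sanity-checked before a connection is saved. The sketch below is only an illustration: the class and field names are hypothetical, and the rule that at least one of the HDFS or HIVE connection IDs must be present is an assumption drawn from the pre-requisites rather than a documented validation.

```python
from dataclasses import dataclass
from typing import List, Optional
from urllib.parse import urlparse


@dataclass
class AtlasConnectionSettings:
    # Hypothetical container mirroring the settings listed above.
    connection_name: str
    license_type: str
    atlas_url: str
    username: str
    password: str
    hdfs_connection_id: Optional[int] = None
    hive_connection_id: Optional[int] = None


def validate(settings: AtlasConnectionSettings) -> List[str]:
    """Return a list of problems; an empty list means the settings look usable."""
    problems = []
    if settings.license_type not in ("Standard", "Auto-Lineage"):
        problems.append("license_type must be 'Standard' or 'Auto-Lineage'")

    parsed = urlparse(settings.atlas_url)
    try:
        port = parsed.port
    except ValueError:  # raised for a non-numeric or out-of-range port
        port = None
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        problems.append("atlas_url must be an http(s) URL with a host")
    elif port is None:
        problems.append("atlas_url must include a port number")

    if not settings.username or not settings.password:
        problems.append("username and password are required")
    # Assumption: Atlas properties are mapped onto an already crawled connection.
    if settings.hdfs_connection_id is None and settings.hive_connection_id is None:
        problems.append("provide an HDFS or HIVE connection ID")
    return problems


if __name__ == "__main__":
    settings = AtlasConnectionSettings(
        "Atlas Connection DB", "Auto-Lineage",
        "http://18.220.154.229:31000", "svc_user", "secret",
        hive_connection_id=1001,  # illustrative ID
    )
    print(validate(settings))
```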

Crawler Setting Options are disabled for this connection.

Points to note

- We use APIs to get the JSON (properties) from the Atlas server.

- Every object in Atlas has a GUID, and we store the details of the objects received in the Atlas_Audits table.

- We store the JSON lineage data in both the source code and dataset tables.

- Atlas lineage is implemented in a separate class.

- After both Hive and Atlas have been crawled, no connection to Atlas is needed to build lineage.

- We bring in the datasets related to lineage at the time of crawling Atlas.

- Crawling is done hierarchically:

Schema1 → Table1 → Column1, Column2… → Table2 → Column1, Column2… → Schema2…
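The hierarchical order shown above corresponds to a depth-first walk. A minimal sketch, assuming the crawled metadata is held as a nested mapping of schema to table to column list (a layout chosen here purely for illustration):

```python
def crawl_order(catalog):
    """Yield (object_type, qualified_name) pairs in depth-first crawl order."""
    for schema, tables in catalog.items():
        yield ("schema", schema)
        for table, columns in tables.items():
            yield ("table", f"{schema}.{table}")
            for column in columns:
                yield ("column", f"{schema}.{table}.{column}")


if __name__ == "__main__":
    catalog = {
        "Schema1": {"Table1": ["Column1", "Column2"], "Table2": ["Column1"]},
        "Schema2": {},
    }
    for object_type, name in crawl_order(catalog):
        print(object_type, name)
```

Each schema is fully visited, including all of its tables and their columns, before the next schema begins.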

FAQs

- Is the Hive mentioned in the data source different from the Hive in Atlas?

  Yes. For Atlas crawling, we first need to connect to the Hive database (on the same cluster on which Atlas is mounted). The sync then happens when we provide the connection info ID in the Atlas connection details, and the properties are mapped correctly.

- What is mapped from Atlas to OvalEdge?

  At the time of crawling, the following details are fetched from Atlas:

  - Object name: mapped to entity (Table name / Column name / Schema name / File name)

  - Object type: mapped to Object Type (Table / Column / Schema / File)

  - Labels: mapped to OE Tags

  - Glossary: mapped to OE Business Glossary and Terms

  - Description: mapped to description

  - Classification: mapped to Custom fields

  - Business Metadata: mapped to Custom fields

  - User defined Metadata (Custom Attributes): mapped to Custom fields

  - Lineage: mapped to Lineage
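The mapping described in the FAQ above can be pictured as a small transformation over one Atlas entity. The sketch below assumes the entity JSON carries `typeName`, `attributes`, `labels`, `classifications`, `businessAttributes`, `customAttributes`, and glossary terms under `relationshipAttributes.meanings`. The output keys and the type-name table are hypothetical choices for this example and do not reflect OvalEdge's internal schema.

```python
# Assumed correspondence between Atlas type names and OvalEdge object types.
OBJECT_TYPES = {
    "hive_db": "Schema",
    "hive_table": "Table",
    "hive_column": "Column",
    "hdfs_path": "File",
}


def map_entity(entity):
    """Map one parsed Atlas entity to a flat, OvalEdge-style record."""
    attributes = entity.get("attributes") or {}

    custom_fields = {}
    classifications = [c["typeName"] for c in entity.get("classifications") or []]
    if classifications:
        custom_fields["Classification"] = classifications
    # Business metadata is grouped by name; flatten to "Group.attribute" keys.
    for group, values in (entity.get("businessAttributes") or {}).items():
        for key, value in values.items():
            custom_fields[f"{group}.{key}"] = value
    # User-defined (custom) attributes are plain key/value pairs.
    custom_fields.update(entity.get("customAttributes") or {})

    meanings = (entity.get("relationshipAttributes") or {}).get("meanings") or []
    return {
        "name": attributes.get("name"),
        "object_type": OBJECT_TYPES.get(entity.get("typeName")),
        "description": attributes.get("description"),
        "tags": list(entity.get("labels") or []),
        "terms": [m.get("displayText") for m in meanings],
        "custom_fields": custom_fields,
    }


if __name__ == "__main__":
    entity = {
        "typeName": "hive_table",
        "attributes": {"name": "orders", "description": "Order facts"},
        "labels": ["pii"],
        "classifications": [{"typeName": "Sensitive"}],
        "businessAttributes": {"Ownership": {"steward": "ann"}},
        "customAttributes": {"team": "sales"},
        "relationshipAttributes": {"meanings": [{"displayText": "Order"}]},
    }
    print(map_entity(entity))
```

Lineage is left out of this record because it is fetched and stored separately from the entity properties.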